MAGIC: Manipulating Avatars and Gestures to Improve Remote Collaboration

In today's globalized world, remote collaboration is increasingly important. The cost and time overhead of air travel, global warming, and, more recently, the pandemic have made the ability to work remotely very popular, if not essential.

Traditional approaches to remote collaboration, using voice and video, have been used for decades. However, these technologies neglect essential aspects of interpersonal non-verbal communication such as gaze, body posture, gestures to indicate objects referred to in speech (deictic gestures), or even how people use the space and position themselves when communicating (i.e. proxemics). These limitations negatively affect how remote people interact with a local user, especially for operations that require 3D spatial referencing and action demonstration.

Figure 1: Collaborating remotely in a shared 3D workspace: A) Veridical Face-to-face Remote Meeting: Kate and George can see each other but have opposing points-of-view of the workspace. Kate indicates the optimal position for the component she is designing using proximal pointing gestures. George does not understand the location Kate is referring to as it is occluded. Kate needs to explain it through words or ask George to come by her side, which brings added complexity to the collaboration task. B) MAGIC Remote Meeting: Kate and George both share the “illusion” of standing on opposite sides of the workspace but are in fact sharing the same point-of-view. Kate’s representation is manipulated so that George sees her arm pointing to the location she intends to convey. George understands where she is referring to, without any added effort.

Mixed Reality (MR) is a key technology for enabling effective remote collaboration. Fields such as engineering, architecture, and medicine already take advantage of MR approaches for the analysis of 3D models. Remote collaboration through MR allows teams to meet in life-sized representations and discuss topics as if sharing the same office. This enables a high level of co-presence and allows a more rigorous spatial comprehension of the 3D models they are working on. Indeed, previous research found that for a remote meeting to be closer to a co-located experience, it should rely on a real-size portrayal of remote people to maintain the sense of “being there”. Furthermore, people perform tasks better when communicating via full-body gestures.

People use virtual environments and communicate through life-sized avatars to collaborate as if they were in the same room. One common task in this setup is to work with 3D models, like designing prosthetics, vehicles, or buildings. However, talking about these 3D models face-to-face can be challenging. There are issues like misunderstandings, objects blocking the view, and different perspectives, which make it harder to work together effectively and can lead to mistakes.

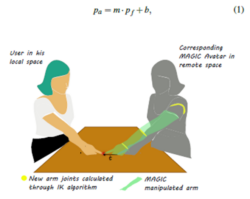

Figure 2: Manipulated avatar (right) corresponding to a pointing user in his local space (left). Transformation t is calculated as the difference in position between the remote collaborator’s fingertips in his local space (left) and the remote avatar’s fingertips after the mirroring process (right, grey). t is then applied to the remote avatar’s fingertip, in combination with an inverse kinematics (IK) algorithm, to calculate the new positions of the arm joints.
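The fingertip correction described in the Figure 2 caption can be sketched in a few lines. The following is a minimal illustration, not the authors' implementation: it assumes the mirroring is a reflection across the plane z = 0, treats the fingertip as the end of a two-segment arm, and uses a simple analytic two-bone IK solver as a stand-in for whatever IK algorithm the system actually uses. All function names, segment lengths, and coordinates are hypothetical.

```python
import math

Vec3 = tuple[float, float, float]

def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def norm(a: Vec3) -> float:
    return math.sqrt(dot(a, a))

def mirror_z(p: Vec3) -> Vec3:
    """Reflect a point across the plane z = 0 (assumed mirroring plane)."""
    return (p[0], p[1], -p[2])

def fingertip_offset(local_tip: Vec3, mirrored_tip: Vec3) -> Vec3:
    """t = fingertip in the user's local space minus the mirrored avatar's fingertip."""
    return sub(local_tip, mirrored_tip)

def two_bone_ik(shoulder: Vec3, target: Vec3, upper_len: float, fore_len: float,
                bend_hint: Vec3 = (0.0, -1.0, 0.0)) -> tuple[Vec3, Vec3]:
    """Analytic two-bone IK: return (elbow, hand) with the hand as close to
    `target` as the arm's reach allows. `bend_hint` picks the elbow side."""
    to_target = sub(target, shoulder)
    dist = norm(to_target)
    direction = scale(to_target, 1.0 / dist) if dist > 1e-9 else (0.0, 0.0, 1.0)
    # Clamp the shoulder-to-hand distance to what the two segments can reach.
    d = min(max(dist, abs(upper_len - fore_len), 1e-9), upper_len + fore_len)
    hand = add(shoulder, scale(direction, d))
    # Distance along `direction` to the elbow's projection, and elbow height above it.
    a = (upper_len ** 2 - fore_len ** 2 + d ** 2) / (2.0 * d)
    h = math.sqrt(max(upper_len ** 2 - a ** 2, 0.0))
    # Component of the bend hint perpendicular to the shoulder-to-hand axis.
    perp = sub(bend_hint, scale(direction, dot(bend_hint, direction)))
    if norm(perp) < 1e-9:  # hint parallel to the axis: fall back to the x axis
        fallback = (1.0, 0.0, 0.0)
        perp = sub(fallback, scale(direction, dot(fallback, direction)))
    perp = scale(perp, 1.0 / norm(perp))
    elbow = add(add(shoulder, scale(direction, a)), scale(perp, h))
    return elbow, hand

# Illustrative values only (metres).
local_tip = (0.2, 1.1, 0.3)                   # where the remote user points
mirrored_tip = mirror_z(local_tip)            # avatar fingertip after mirroring
t = fingertip_offset(local_tip, mirrored_tip)
corrected_tip = add(mirrored_tip, t)          # fingertip target after applying t
avatar_shoulder = (0.0, 1.4, -0.1)
elbow, hand = two_bone_ik(avatar_shoulder, corrected_tip, 0.30, 0.28)
```

Under these assumptions, applying t moves the avatar's fingertip back onto the location the user actually indicated, and the IK step only decides how the elbow bends to get there. A full-body avatar would typically need a solver that also respects joint limits and wrist orientation.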

To overcome these challenges, we propose a new approach called MAGIC. It helps people understand and coordinate their pointing gestures when working with 3D content in a face-to-face virtual space, by making sure that what one person is pointing at is accurately represented for the other person. To measure how well people understand each other when using pointing gestures, we created a new measure called "pointing agreement."

The results of a study we conducted show that MAGIC significantly improves how well people agree on what they're pointing at when working face-to-face in virtual 3D environments.

This not only helps people feel like they are in the same place (co-presence) but also enhances their awareness of what's happening in the shared space. We believe that MAGIC makes remote collaboration easier and helps people communicate and understand each other better.

Figure 3: Setup for the experiment: two users in different tracked stations, each wearing an Oculus Rift HMD and using Touch controllers. A moderator was present at all times.